Navigating the Future of AI in Medicine - A Deep Dive into Medical Large Language Models

Paper info: Li, Q., Liu, H., Guo, C., Gao, C., Chen, D., Wang, M., … & Gu, J. (2025). Reviewing Clinical Knowledge in Medical Large Language Models: Training and Beyond. Knowledge-Based Systems, 114215.

A comprehensive guide to the latest developments in clinical knowledge integration for AI systems

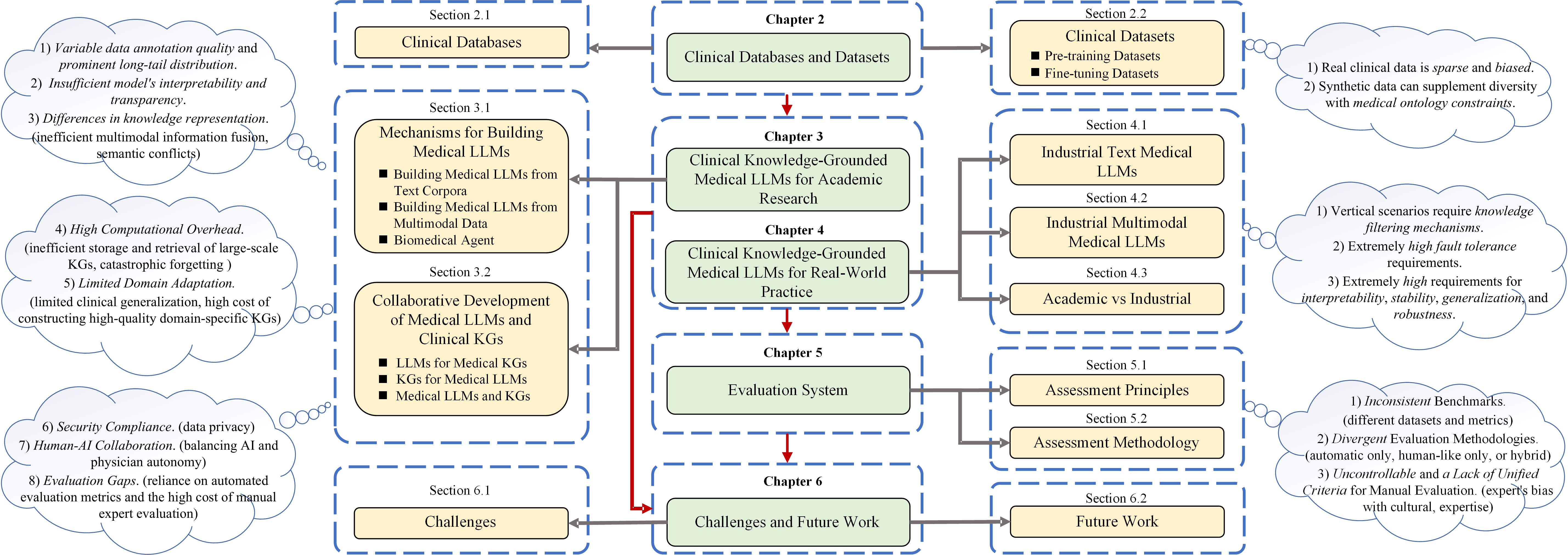

The intersection of artificial intelligence and healthcare has reached a pivotal moment. A survey published in Knowledge-Based Systems (Li et al., 2025) analyzes how clinical knowledge is being integrated into large language models (LLMs) for medical applications. Drawing on more than 160 papers, it documents both substantial progress and significant open challenges in building AI systems that can understand and apply medical expertise.

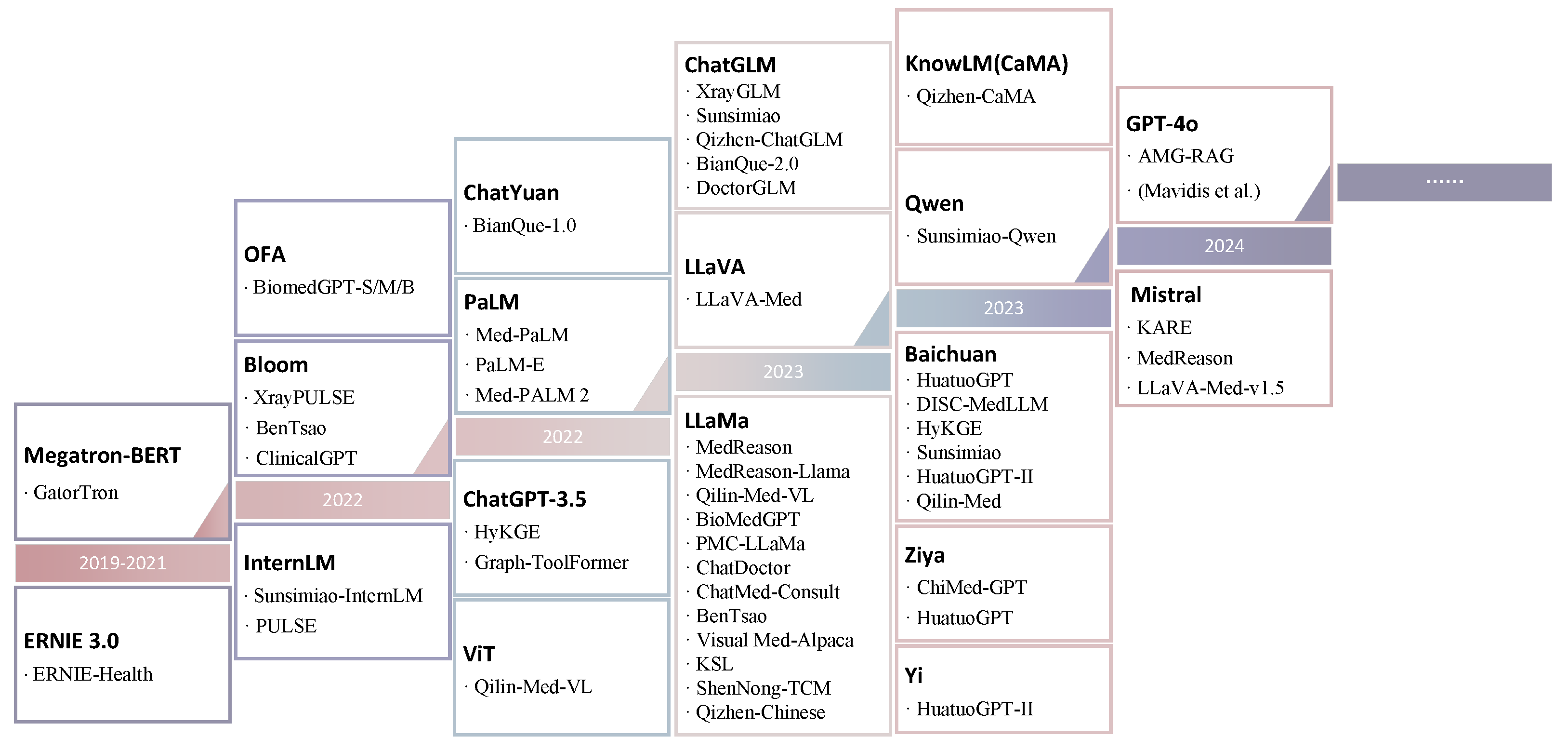

The Medical AI Revolution: Beyond ChatGPT

While general-purpose AI models like ChatGPT have captured public attention, the medical field demands something far more sophisticated. Medical LLMs aren’t just about processing text—they need to understand complex clinical relationships, interpret medical images, and provide traceable, evidence-based recommendations that healthcare professionals can trust.

The survey reveals three critical dimensions that define the current landscape:

- Task diversity: From diagnosis and prediction to patient management across different medical specialties

- Model architecture: Including text-only models, multimodal systems, and knowledge graph-enhanced approaches

- Data complexity: Spanning public datasets, specialized medical corpora, and real-world clinical data

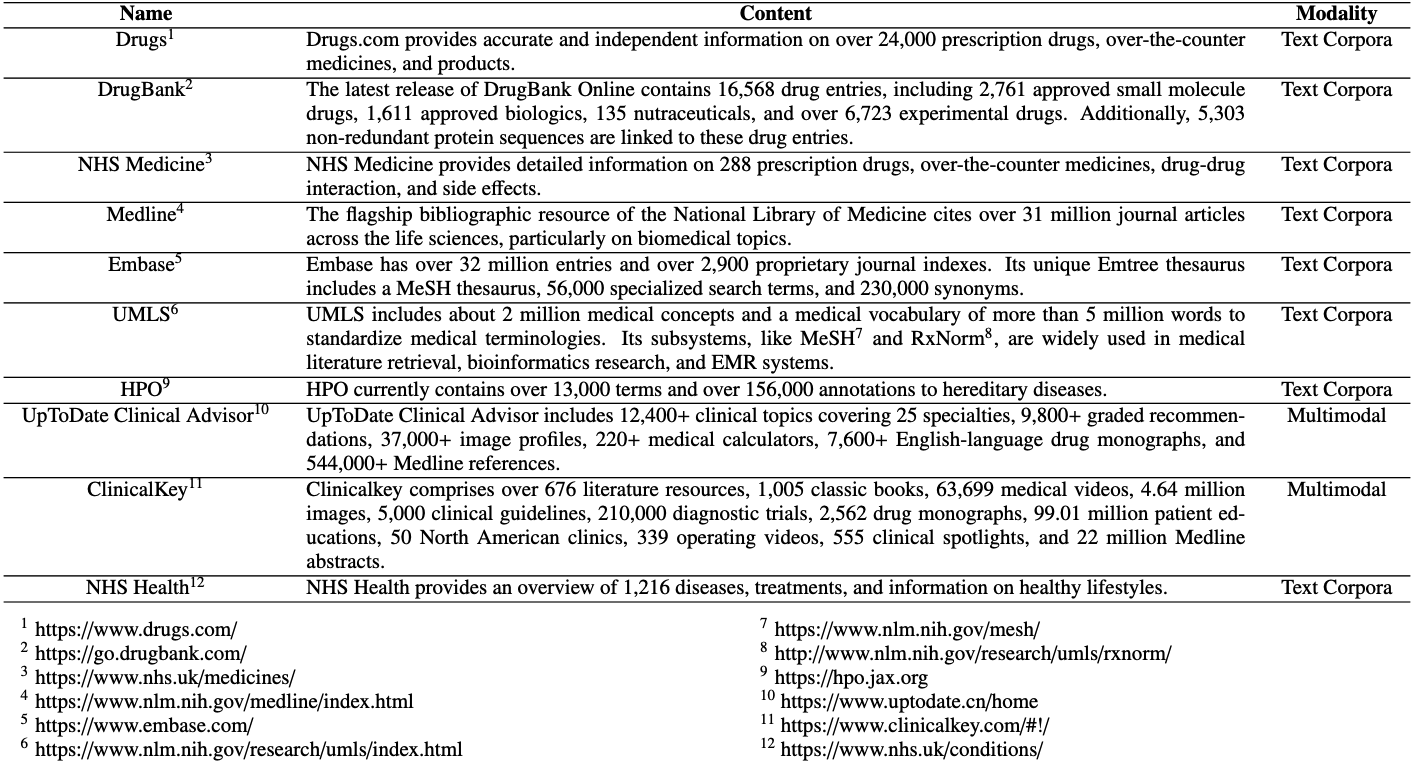

The Foundation: Clinical Data and Knowledge Bases

Rich but Fragmented Data Landscape

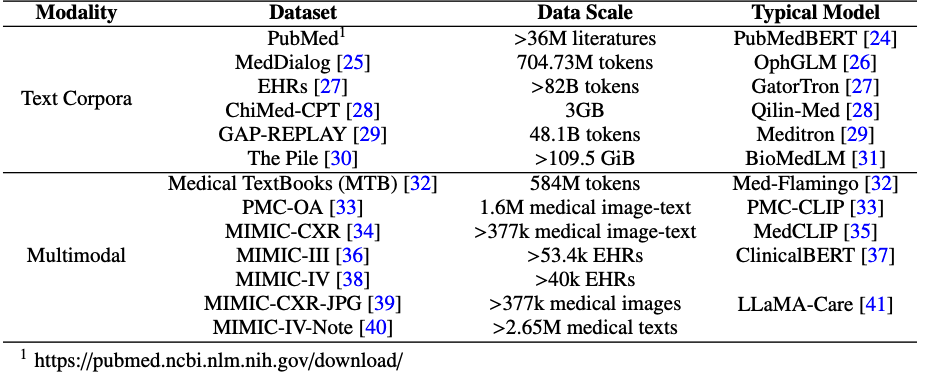

One of the most striking discoveries is the sheer diversity of clinical data sources now available. The research catalogs everything from massive text corpora like PubMed (over 36 million articles) to specialized multimodal datasets combining medical images with clinical notes.

However, a critical gap emerges: most datasets lack multilingual support. While English and Chinese resources dominate, healthcare AI remains largely inaccessible to practitioners and patients speaking other languages. This represents both a significant limitation and an opportunity for future development.

The Pre-training vs Fine-tuning Divide

The survey reveals a sophisticated ecosystem of dataset usage:

Pre-training datasets focus on broad medical knowledge acquisition:

- Text corpora from medical literature and clinical records

- Multimodal data combining images with textual descriptions

- Massive scale (some datasets exceed 48 billion tokens)

Fine-tuning datasets target specific medical tasks:

- Medical examinations and board certifications

- Clinical question-answering scenarios

- Specialized tasks like medical image analysis and report generation

Building Medical Intelligence: Four Paradigmatic Approaches

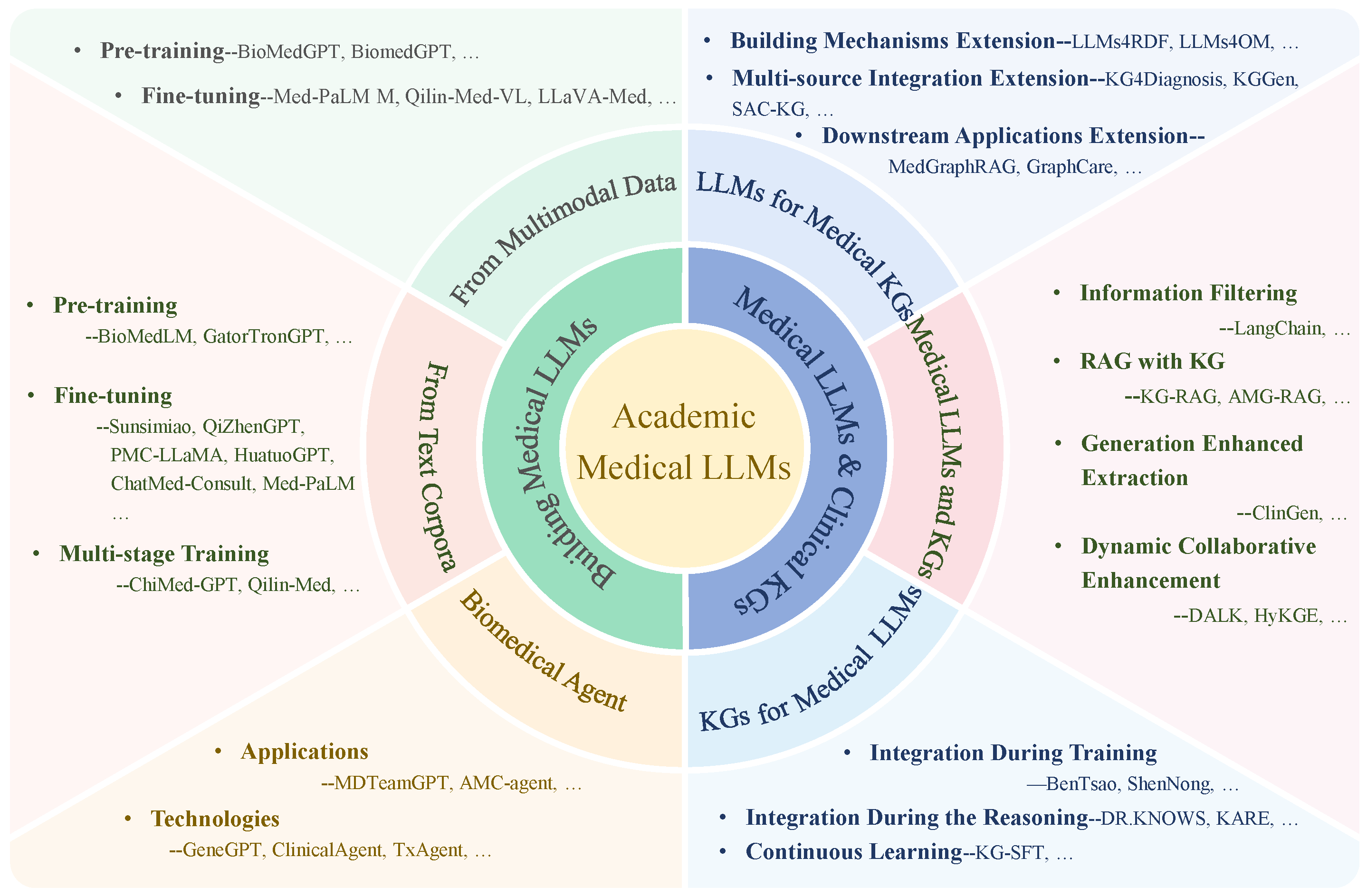

1. Text-Based Medical LLMs: The Foundation

The journey begins with text-only models that learn from vast medical corpora. Models like BioMedLM and GatorTronGPT demonstrate that domain-specific pre-training can achieve remarkable results:

- BioMedLM: Achieved 50.3% accuracy on MedQA-USMLE, a benchmark of US medical licensing exam questions

- GatorTronGPT: In a physicians' Turing test, its generated clinical text was rated comparably to human-written text for readability and clinical relevance

The research identifies three primary training strategies:

- Pre-training only: Building from scratch on medical data

- Fine-tuning only: Adapting general models to medical tasks

- Multi-stage training: Combining pre-training, supervised fine-tuning, and reinforcement learning

2. Multimodal Medical LLMs: Seeing and Understanding

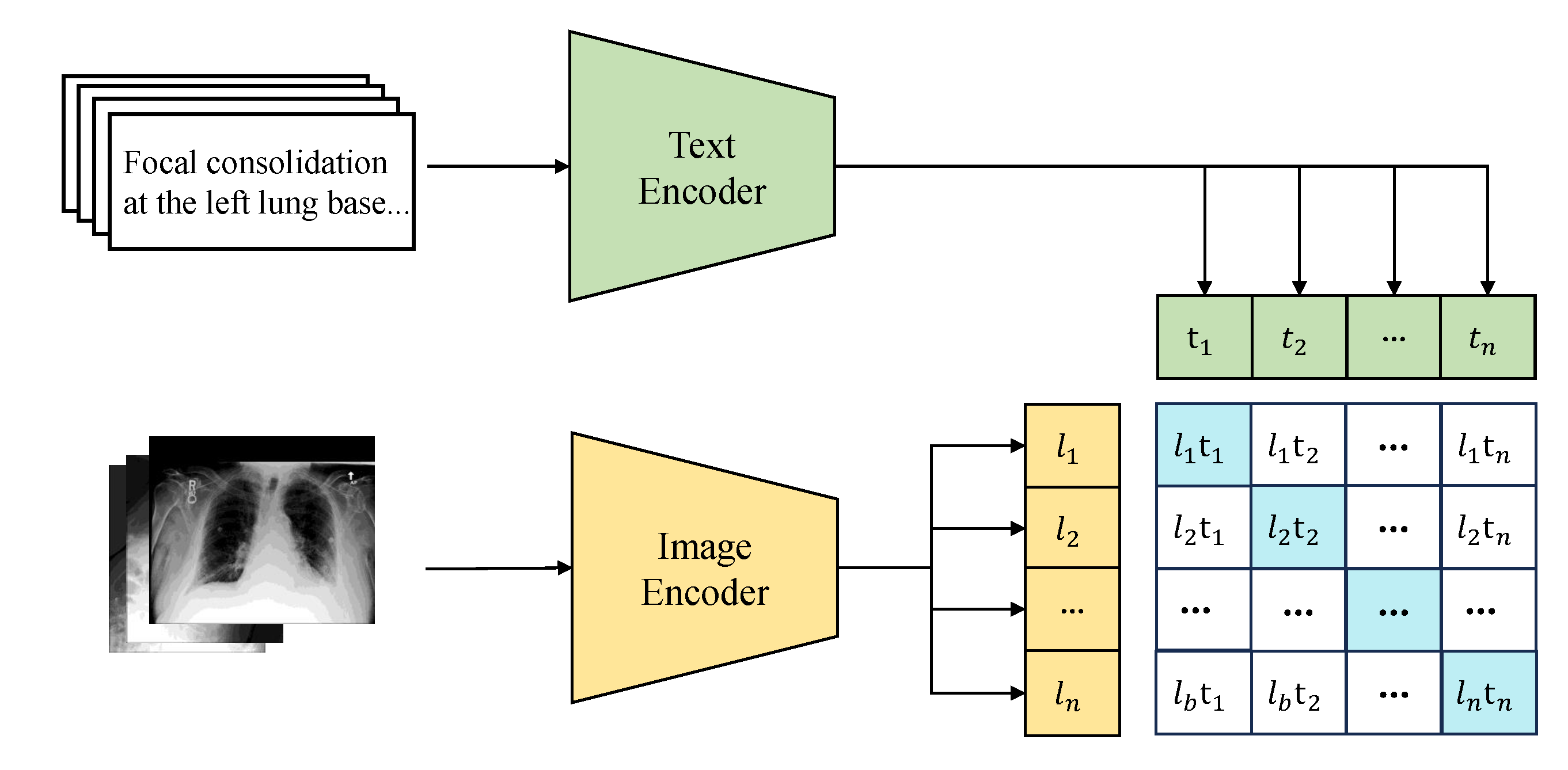

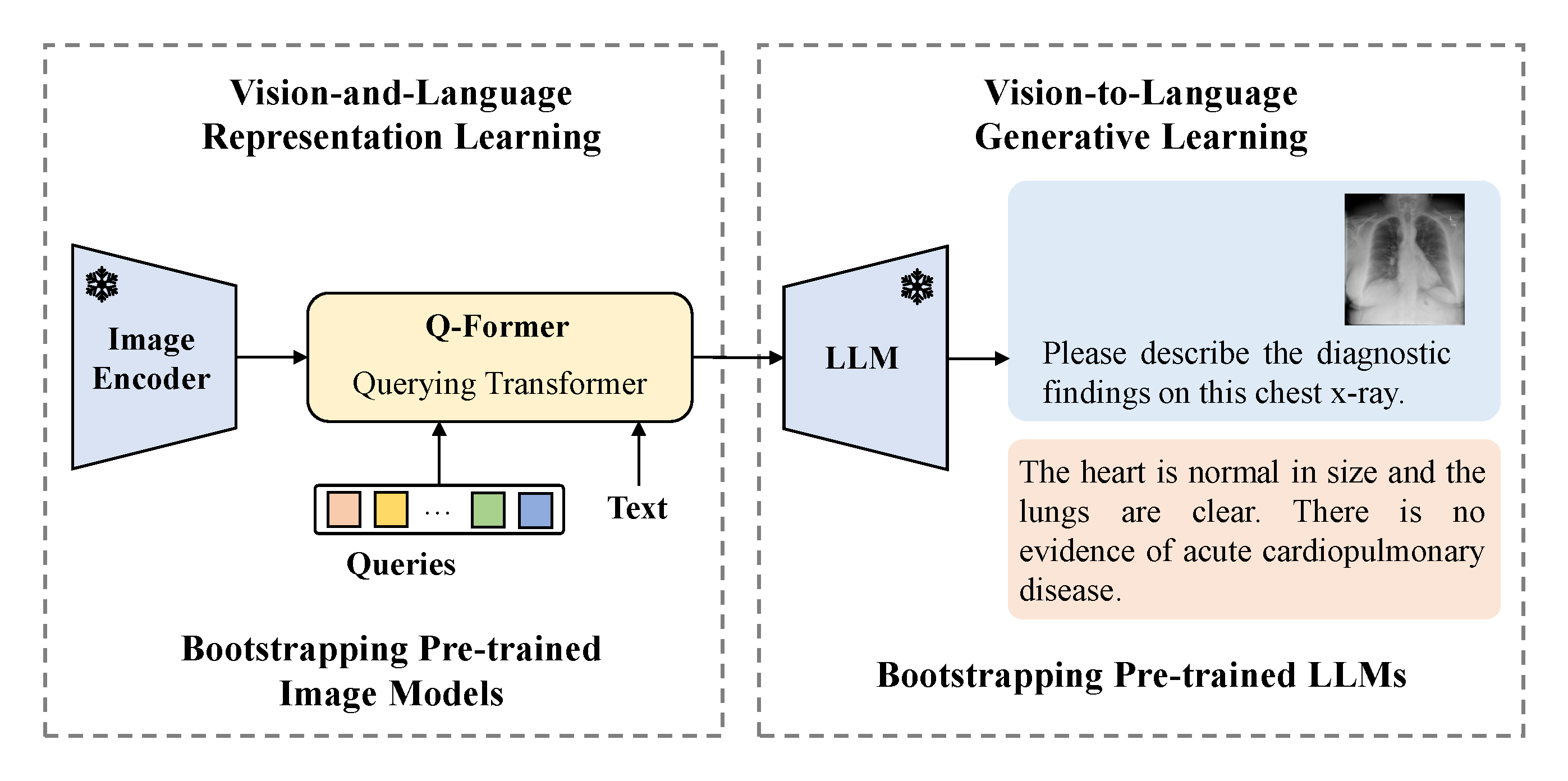

Perhaps the most exciting development is the emergence of multimodal systems that can process both text and medical images. The survey identifies three distinct approaches:

- Contrastive Learning: Teaching models to align medical images with textual descriptions

- End-to-End Training: Processing multiple modalities through unified architectures

- Prompt Combination: Linking pre-trained components through clever prompt design
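To make the contrastive learning idea concrete, here is a minimal sketch of a symmetric InfoNCE-style loss, the kind of objective used by CLIP-like models. The survey does not prescribe this exact formulation; the function names, the two-dimensional toy embeddings, and the temperature value are all assumptions chosen for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def cross_entropy_row(logits, target_index):
    """Softmax cross-entropy for one row of logits (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target_index]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss: the i-th image should match the i-th text."""
    n = len(image_embs)
    # Similarity matrix: rows are images, columns are texts.
    sims = [[cosine(img, txt) / temperature for txt in text_embs]
            for img in image_embs]
    image_to_text = sum(cross_entropy_row(sims[i], i) for i in range(n)) / n
    text_to_image = sum(
        cross_entropy_row([sims[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2

# Hypothetical embeddings standing in for encoder outputs.
aligned_images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]   # each text matches its image
shuffled_texts = [[0.1, 0.9], [0.9, 0.1]]  # pairs deliberately mismatched

loss_aligned = contrastive_loss(aligned_images, aligned_texts)
loss_shuffled = contrastive_loss(aligned_images, shuffled_texts)
```

With these toy values, the correctly paired batch yields a much lower loss than the mismatched one, which is the signal that pulls matching image and text embeddings together during training.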

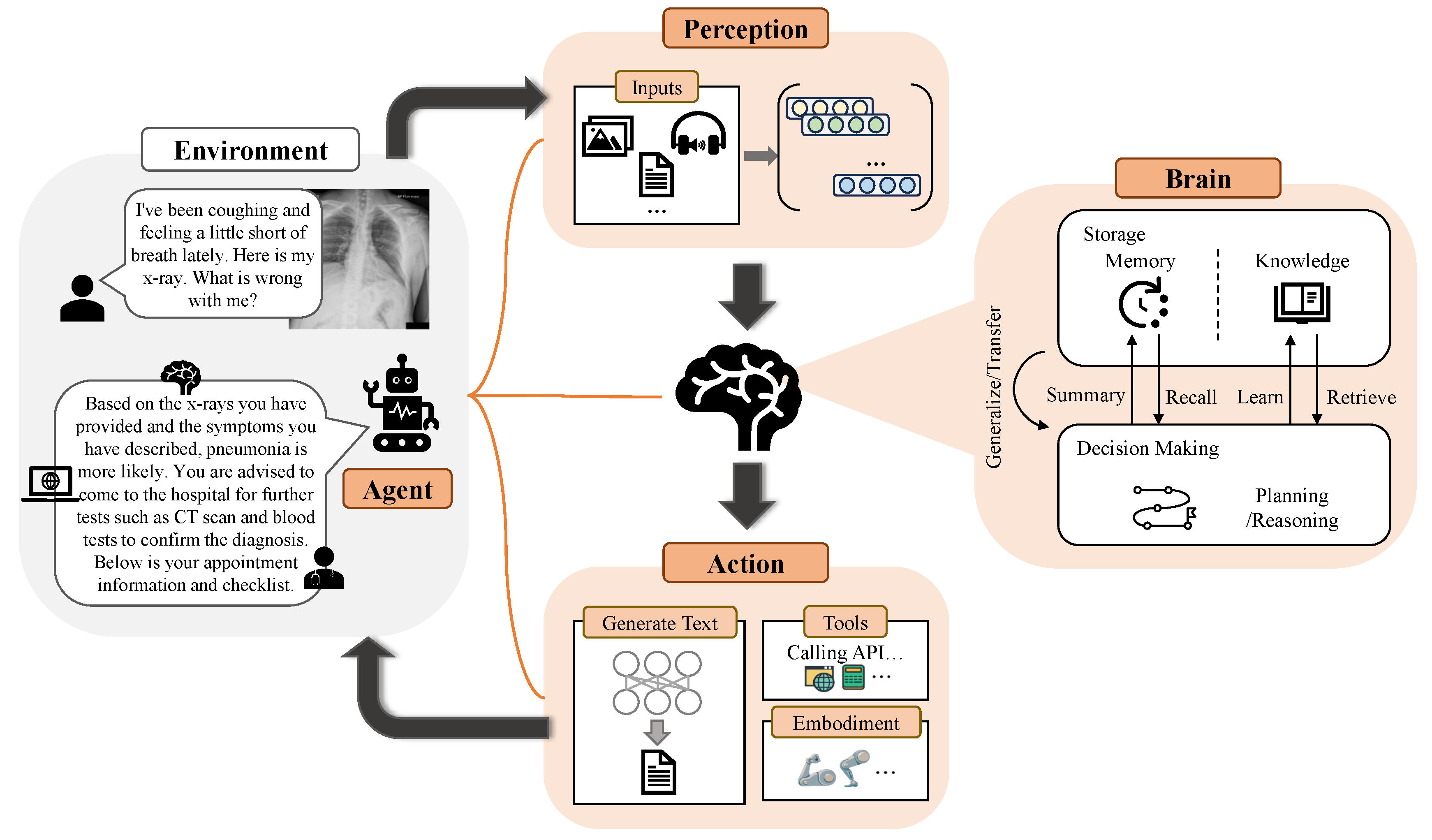

3. Agent-Based Systems: AI That Can Act

The frontier of medical AI extends beyond passive question-answering to active problem-solving through agent-based systems. These LLM-powered agents can:

- Perceive clinical environments through sensors

- Make decisions based on medical reasoning

- Take actions using specialized medical tools

Current applications span from autism social skills training to intelligent diagnostic assistance, representing a shift toward “collective intelligence-driven productivity transformation.”
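The perceive, decide, and act cycle can be sketched in a few lines. This is a toy illustration rather than any system described in the survey: the class `ToyClinicalAgent`, the tool `lookup_guideline`, and the thresholds are hypothetical, and the rule-based `decide` step stands in for what would be an LLM call in a real agent.

```python
from dataclasses import dataclass, field

@dataclass
class Observation:
    heart_rate: int        # beats per minute
    temperature_c: float   # degrees Celsius

def lookup_guideline(topic):
    """Hypothetical tool: stands in for a guideline database or API."""
    guidelines = {
        "tachycardia": "Review medications and consider an ECG.",
        "fever": "Check for infection sources and recheck temperature.",
    }
    return guidelines.get(topic, "No guideline found.")

@dataclass
class ToyClinicalAgent:
    log: list = field(default_factory=list)

    def perceive(self, raw_reading):
        """Turn a raw sensor reading (a dict) into a typed observation."""
        return Observation(**raw_reading)

    def decide(self, obs):
        """Placeholder reasoning step; thresholds are illustrative only."""
        findings = []
        if obs.heart_rate > 100:
            findings.append("tachycardia")
        if obs.temperature_c >= 38.0:
            findings.append("fever")
        return findings

    def act(self, findings):
        """Call a tool for each finding and record what was done."""
        actions = [lookup_guideline(f) for f in findings]
        self.log.extend(actions)
        return actions

    def step(self, raw_reading):
        return self.act(self.decide(self.perceive(raw_reading)))

agent = ToyClinicalAgent()
actions = agent.step({"heart_rate": 112, "temperature_c": 37.2})
```

Keeping the three stages as separate methods makes it easy to swap the rule-based `decide` for a model call without touching how observations are gathered or how tools are invoked.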

4. Knowledge Graph Integration: Structured Medical Reasoning

One of the most promising developments is the integration of medical knowledge graphs (KGs) with LLMs, addressing critical limitations like hallucination and interpretability. The research identifies three paradigms:

- LLMs for Medical KGs: Using language models to build and maintain medical knowledge bases

- KGs for Medical LLMs: Enhancing language models with structured medical knowledge

- Collaborative Systems: Dynamic interaction between knowledge graphs and language models
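As a rough illustration of the second paradigm (KGs for Medical LLMs), the sketch below retrieves matching triples from a tiny in-memory graph and places them in the prompt so the model can ground its answer. The triples, the function names, and the prompt wording are assumptions made for this example; real systems use far larger graphs and more careful entity linking.

```python
# Toy knowledge graph as (subject, relation, object) triples.
# Content is illustrative only, not a clinical reference.
TRIPLES = [
    ("metformin", "treats", "type 2 diabetes"),
    ("metformin", "contraindicated_in", "severe renal impairment"),
    ("lisinopril", "treats", "hypertension"),
]

def retrieve_triples(question, triples):
    """Return triples whose subject or object is mentioned in the question."""
    q = question.lower()
    return [t for t in triples if t[0] in q or t[2] in q]

def build_prompt(question, triples):
    """Prepend retrieved facts so the answer can be traced back to them."""
    facts = retrieve_triples(question, triples)
    fact_lines = "\n".join(
        f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts
    )
    return (
        "Answer using only the facts below and cite the ones you use.\n"
        f"Facts:\n{fact_lines}\n"
        f"Question: {question}"
    )

question = "Is metformin safe for this patient?"
prompt = build_prompt(question, TRIPLES)
```

Because the facts are listed explicitly, a reviewer can check which triples an answer relied on, which is one way structured knowledge can help with hallucination and interpretability.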

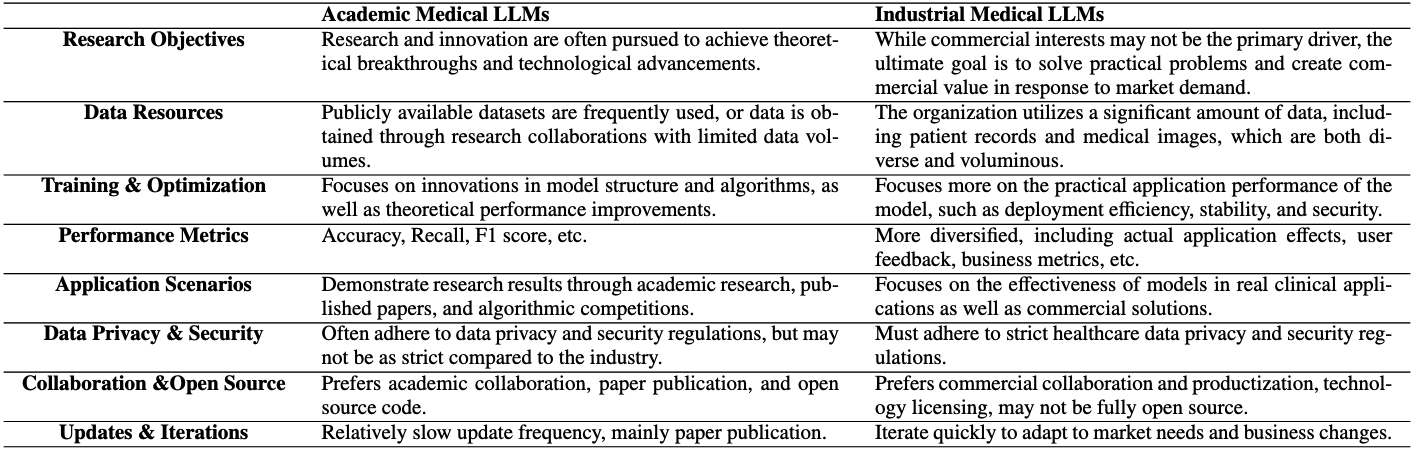

From Lab to Clinic: The Academic-Industrial Divide

Academic Innovation vs. Real-World Application

The survey reveals a fascinating tension between academic research and industrial implementation. While academic models focus on theoretical breakthroughs and algorithmic innovation, industrial systems prioritize practical deployment and regulatory compliance.

Key differences include:

- Data sources: Public datasets vs. proprietary clinical data

- Training focus: Algorithmic innovation vs. deployment stability

- Evaluation metrics: Research benchmarks vs. real-world effectiveness

- Regulatory requirements: Academic freedom vs. clinical compliance

Industrial Success Stories

The research documents remarkable progress in commercial medical AI:

- Traditional Chinese Medicine: Systems like DaJing integrate ancient medical wisdom with modern AI

- Multimodal Platforms: Companies are building comprehensive health management systems

- Specialized Applications: From ophthalmology (EyeGPT) to drug discovery (PanGu)

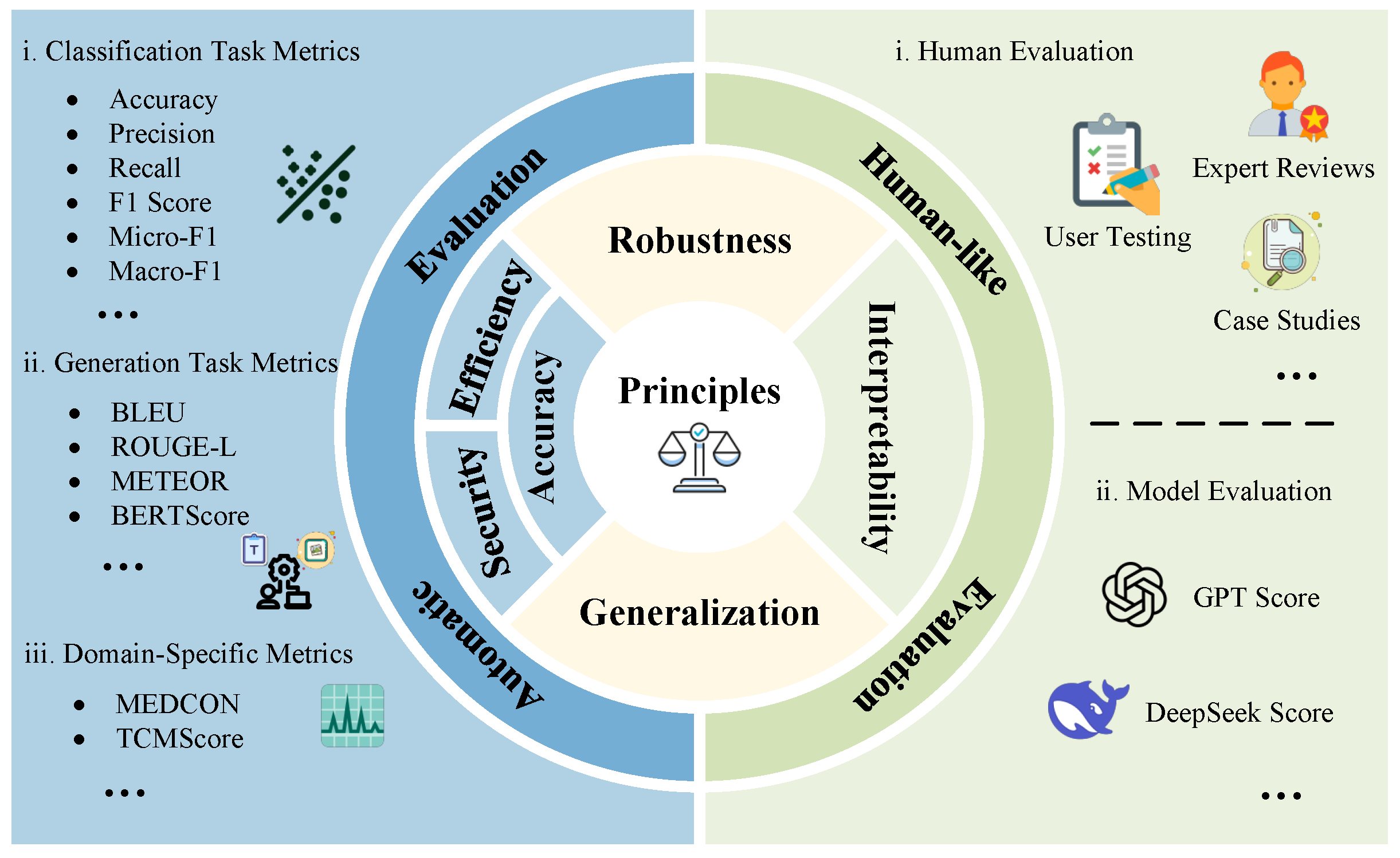

The Evaluation Challenge: Measuring Medical AI

Beyond Accuracy: A Multi-Dimensional Framework

One of the survey’s most important contributions is highlighting the inadequacy of current evaluation methods. Medical AI requires assessment across six critical dimensions:

- Accuracy: Correctness of medical predictions

- Robustness: Performance under varied conditions

- Generalization: Effectiveness on unseen data

- Interpretability: Explainability of decisions

- Efficiency: Computational and time requirements

- Security: Protection against malicious use
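Two of these dimensions, accuracy and robustness, are easy to illustrate together: score the model on clean questions, then again on perturbed versions of the same questions. The sketch below is a toy setup, not the survey's protocol; `toy_model`, the uppercase perturbation, and the two questions are hypothetical stand-ins.

```python
def accuracy(predict, examples):
    """Fraction of (question, answer) pairs answered correctly."""
    correct = sum(1 for question, answer in examples
                  if predict(question) == answer)
    return correct / len(examples)

def toy_model(question):
    """Hypothetical stand-in for a medical LLM: case-sensitive keyword lookup."""
    if "insulin" in question:
        return "A"
    return "B"

def uppercase(question):
    """A simple perturbation: same content, different surface form."""
    return question.upper()

examples = [
    ("Which hormone lowers blood glucose? A) insulin B) glucagon", "A"),
    ("Which organ filters blood to produce urine? A) liver B) kidney", "B"),
]
perturbed_examples = [(uppercase(q), a) for q, a in examples]

report = {
    "accuracy": accuracy(toy_model, examples),              # clean inputs
    "robustness": accuracy(toy_model, perturbed_examples),  # perturbed inputs
}
```

Here the toy model is perfect on the clean questions but loses half its score once the text is uppercased, a gap that a single accuracy number would hide.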

The Human Factor

The research emphasizes that automated metrics alone are insufficient. Human evaluation, including expert reviews, user testing, and case studies, remains essential for assessing medical AI systems. However, current evaluation practice, both automated and human, faces several challenges:

- Dataset inconsistency: Different models evaluated on different benchmarks

- Metric variability: No standardized evaluation protocols

- Subjective bias: Human evaluation criteria and procedures vary widely across studies

Critical Challenges and Future Directions

The Current Limitations

Despite remarkable progress, the survey identifies persistent challenges:

- Data Scarcity: Limited multilingual and rare disease coverage

- Model Opacity: Difficulty in explaining AI decisions to clinicians

- Evaluation Gaps: Narrow metrics that don’t capture clinical utility

- Integration Issues: Challenges in combining knowledge graphs with language models

The Path Forward

The research outlines several promising directions:

Technical Advances:

- Multi-source entity alignment for better knowledge integration

- Multimodal fusion with causal reasoning capabilities

- Domain-aware retrieval and adaptive calibration systems

Evaluation Innovation:

- Multilingual and multimodal evaluation datasets

- Multi-dimensional assessment frameworks

- Clinically meaningful evaluation beyond simple accuracy

Human-Centered Design:

- Enhanced transparency and interpretability

- Better integration with clinical workflows

- Attention to patient experience and cross-cultural communication

Implications for Healthcare’s Future

The Democratization of Medical Knowledge

Perhaps the most profound implication is the potential for medical AI to democratize access to healthcare expertise. By encoding clinical knowledge in accessible AI systems, we could extend high-quality medical reasoning to underserved populations and resource-limited settings.

The Evolution of Medical Practice

The survey suggests we’re witnessing the early stages of a fundamental transformation in medical practice. Rather than replacing physicians, these systems are evolving toward collaborative intelligence—augmenting human expertise with AI capabilities while maintaining clinical autonomy and judgment.

Regulatory and Ethical Considerations

The research emphasizes that technical advances alone are insufficient. Success requires addressing:

- Data privacy and security in clinical settings

- Regulatory compliance across different healthcare systems

- Ethical considerations in AI-assisted medical decision-making

- Cultural sensitivity in global healthcare applications

Conclusion: Charting the Course Ahead

This comprehensive survey reveals a field in rapid transition. While significant challenges remain—from evaluation standardization to multilingual support—the trajectory is clear: medical AI is moving from experimental curiosity to clinical reality.

The most successful future systems will likely combine multiple approaches: leveraging both structured knowledge graphs and flexible language models, integrating multimodal data while maintaining interpretability, and balancing automation with human oversight.

For researchers, clinicians, and policymakers, the message is clear: the tools and knowledge exist to build more effective medical AI systems. The challenge now is coordinating efforts across disciplines, cultures, and healthcare systems to realize this potential responsibly and equitably.

As we stand at this inflection point, one thing is certain: the future of medicine will be deeply intertwined with artificial intelligence. The question isn’t whether AI will transform healthcare, but how quickly and effectively we can guide that transformation to benefit patients worldwide.

This analysis is based on “Reviewing Clinical Knowledge in Medical Large Language Models: Training and Beyond” by Li et al., published in Knowledge-Based Systems (2025). The survey reviewed 160+ papers on clinical knowledge integration in large language models.

Citation:

@article{LI2025114215,

- Title: Navigating the Future of AI in Medicine - A Deep Dive into Medical Large Language Models

- Author: Haijiang LIU

- Created at: 2025-08-14 11:00:00

- Updated at: 2025-10-05 11:02:10

- Link: https://github.com/alexc-l/2025/08/14/med-llms/

- License: © 2025 Elsevier B.V. All rights are reserved, including those for text and data mining, AI training, and similar technologies.